Deepfake Audio Is Real. Beware Of Scam Calls With AI-Generated Voices Of Your Loved Ones Doing The Rounds



Ever since artificial intelligence (AI) began reaching the masses over the past couple of years, bad actors have steadily gained a new tool for scamming unsuspecting citizens, luring them with false bait and coercing them into taking harmful actions. Deepfake photos and videos have already made the rounds on social media, much to the ire of the Modi Government, which is proactively campaigning to warn people against such scams. Now it appears that deepfake audio is very real as well, and one concerning case has brought a much-needed spotlight to the issue and to several similar cases.

On Monday (March 11), Kaveri Ganapathy Ahuja (who has over 69,000 followers on X) chronicled, in a curious yet cautionary tweet thread, a recent experience in which bad actors impersonating police officers used what appeared to be deepfake audio of her daughter in an apparent scam attempt.

Here’s What Went Down

According to Ahuja’s tweet (posted from her handle @ikaveri), she picked up a call from an unknown number from someone impersonating a police officer. The caller began by asking whether Ahuja knew where her daughter was, claiming that her daughter had been arrested and that it was she who had given the caller Ahuja’s contact number.

When Ahuja asked why her daughter had been arrested, the caller said she was one of four girls arrested by the police for “recording the son of an MLA in a compromising position and then blackmailing him.”

…him my number. “Your daughter has been arrested.”

I felt actually relieved at that point because initially I felt she could have been in an accident or hurt.

“Your daughter and 3 other girls recorded the MLA’s son in a compromising position and blackmailed..

— Kaveri 🇮🇳 (@ikaveri) March 11, 2024

Suspecting that this could be a scam call, Ahuja immediately turned on call recording and asked to speak with her daughter. At this point, the caller allegedly called out to someone to bring her daughter to the phone.

“To my horror, a recording was played to me. ‘Mumma, save me, Mumma, save me.’ The voice sounded exactly like my daughter’s, but that’s not the way she would have spoken,” Ahuja said.

The impersonator then said that the “victim” was “ready to compromise if he is compensated,” and that the only other option was for Ahuja to come to the police station and settle things there.

I asked him to let me speak to my daughter properly. He got all angry and rude.

We are taking her away then, he said.

Ok, take her away then, I told him.

And I laughed.

He cut the call. And that’s how they tried to scam me today. The end.

— Kaveri 🇮🇳 (@ikaveri) March 11, 2024

When Ahuja asked to speak with her daughter “properly,” the caller allegedly got angry and threatened to “take her away.” By then, apparently certain that this was a hoax call, Ahuja told him to go ahead and take her away, and laughed, after which the caller hung up.

Ahuja also shared a screenshot of the phone number from which the call was made.

Yes, I do. pic.twitter.com/wIpxGUXCBy

— Kaveri 🇮🇳 (@ikaveri) March 11, 2024

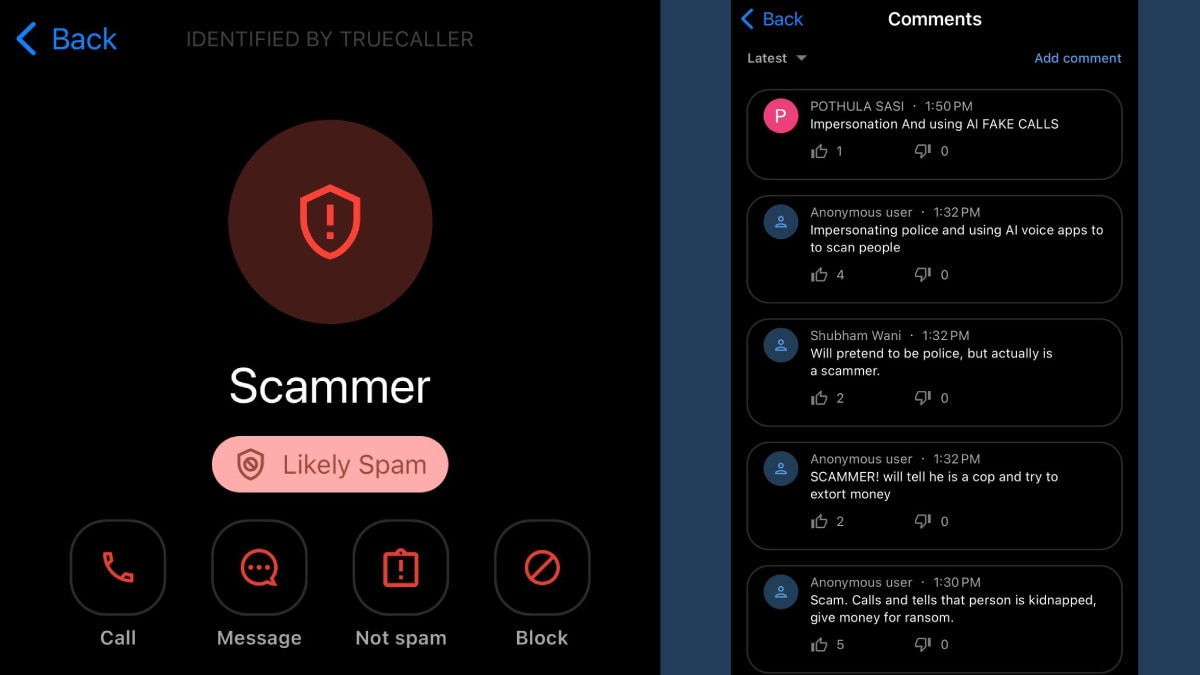

We at ABP Live looked up the number on Truecaller, and it was marked as ‘Scammer’ (it is safe to assume the label was applied by someone who had a similar encounter). In the comments section, an anonymous user had posted four comments (at around 1:32 pm today) describing an experience similar to the one Ahuja recounted in her tweet. Another user, Pothula Sasi, commented that these were “AI FAKE CALLS.”

When another user, Sunanda Rao-Erdem, asked in the thread whether all of this was the work of AI, Ahuja simply replied:

Yup.

— Kaveri 🇮🇳 (@ikaveri) March 11, 2024

As the tweet thread went viral, other users also posted similar experiences.

Here is the number. Some percentage of people do fall into their trap, which is why they keep trying. But where they get so much detail from is a matter of concern. pic.twitter.com/ZSoO04tgUr

— Sergeant J Sansanwal (@jssansanwal) March 11, 2024

Happened to us also a few weeks back. My dad got a call that I’ve been arrested because I got caught with some girl or something then they repeated the same story. Gladly, my dad also knew it was a scam and he didn’t react to it.

— Ujjwal Pandey (@ujjwalpandey07) March 11, 2024

Omg this happened to a friend back in Assam. Got a call late night from a “cop” who asked if he was P’s dad, P who studies here and lives at this address in PG (across the country). And then told him she’s been arrested for possession of drugs.

— . (@TandooriCutlet) March 11, 2024

Similar thing happened with my mum. Though my brother lives out of the country, the tone in which the self proclaimed ASI threatened my mother about his whereabouts, she was really scared. It was a WhatsApp call and the DP had a man wearing police uniform.

— iaamshe (@wanderinwhiner) March 11, 2024

It is not known whether Ahuja reported the incident to the police or the Cyber Crime Unit, but if you find yourself in a similar situation, you are strongly advised to file a complaint as soon as possible.

There is also no way to confirm whether the voice Ahuja heard on the phone was a deepfake of her daughter or simply a recording of a young girl designed to dupe anxious listeners.

More worrying still: if this was indeed the work of deepfake technology, how did the scammers obtain a sample of her daughter’s voice in the first place? Ahuja later said that her daughter does not “put up any [social media] posts ever.”

She doesn’t put up any posts ever. https://t.co/G9J60KPVml

— Kaveri 🇮🇳 (@ikaveri) March 11, 2024

To create deepfake audio, scammers need a sample of the person’s real voice, which they feed into AI tools to produce a synthetic copy that can be made to say whatever phrase they want.

For now, readers are advised to stay cautious and steer clear of calls from unknown numbers as much as possible.


Beyond Fake Calls

Just like doctored photos and videos, the impact of deepfake audio reaches far beyond phone calls. In April 2023, two purported audio clips featuring Dravida Munnetra Kazhagam (DMK) lawmaker Palanivel Thiaga Rajan emerged. In these recordings, the former finance minister of Tamil Nadu allegedly levelled accusations of corruption against members of his own party while praising the opposition Bharatiya Janata Party (BJP).

Although Rajan dismissed the recordings as “fabricated,” a report by the non-profit publication Rest of World found that experts were divided: they disagreed about the authenticity of the first clip but agreed that the second clip was genuine.

In another case, in September 2023, just ahead of Slovakia’s general elections, a deepfake audio clip featuring Michal Šimečka, the chairman of the Progressive Slovakia party, surfaced. The fabricated recording depicted Šimečka allegedly discussing a scheme to rig the election results. The clip quickly gained traction among Slovak social media users, amplifying fears of electoral fraud and manipulation.

Similarly, in the UK, a fake audio clip surfaced that purported to capture Keir Starmer, the leader of the Labour Party, verbally abusing a staff member. The clip garnered millions of views, raising concerns about its potential to sway public opinion ahead of political events.

‘Much Easier To Make A Realistic Audio Clone’

The ease with which realistic audio clones can be created poses significant challenges, particularly given the widespread use of messaging apps for audio sharing. Sam Gregory, executive director of the non-profit organisation Witness, which employs video and technology to advocate for human rights, highlighted the difficulties in detecting synthetic audio during an interview with Logically Facts.

“Compared to videos, it is much easier to make a realistic audio clone. Audio is also easily shared, particularly in messaging apps. These challenges of easy creation and easy distribution are compounded by the challenges in detection — the tools to detect synthetic audio are neither reliable across different ways to create a fake track or across different global languages, nor are journalists and fact-checkers trained with the skills to use these tools,” Gregory told Logically Facts. “AI-created and altered audio is surging in usage globally because it has become extremely accessible to make — easy to do on common tools available online for limited cost, and it does not require large amounts of a person’s speech to develop a reasonable imitation.”

Gregory added, “On a practical level, audio lacks the types of contextual clues that are available in images and videos to see if a scene looks consistent with other sources from the same event,” which makes it even harder for the average listener to recognise deepfake audio.

While the age of AI brings its share of efficiencies and profits, it is undeniable that, much like the atomic bomb built by the Manhattan Project during World War II, whose weapons laboratory was led by J. Robert Oppenheimer, technology can prove to be a relentless enemy in the hands of those with malicious intent.

As global leaders, including Prime Minister Narendra Modi, call for global regulation of AI and its associated harms, it is up to us to remain vigilant and exercise the utmost caution until this beast is tamed.
